This article was published in 2016, and has been republished here with minor modifications. The interesting thing is that, despite the constant change in the world of education technology, these notes on assessment still stand. All I’ve done is get rid of a defunct link, and flesh out some of the thinking behind “Key characteristics”.

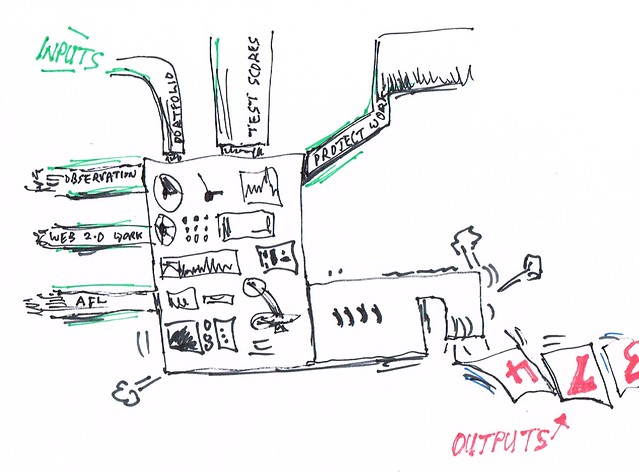

The assessment machine, by Terry Freedman. If you don’t know what’s going on, you cannot have faith in your evaluations.

Goodbye to all that

Levels were the default language for any discussion about how pupils were doing from 1989, when the National Curriculum was born, until 2012, when they were declared not fit for purpose. At first, they worked really well. Not only did they provide a common language, but there were objective benchmarks in the form of Level Descriptors. When someone declared that Bill was a ‘Level 4 in ICT’, everyone had a good idea of what Bill knew and could do – and if they didn’t, all they had to do was look it up.

However, there were several problems with levels. First, teachers, pupils and parents tended to become fixated on the numbers and letters, rather than what they meant. That is to say, the conversation was about how to get from one level to the next, rather than how to improve one’s understanding of the subject.

Secondly, there was often no equivalence between the levels given by one school and those identified by another. A level 4, say, could mean quite different things to different people, despite the fact that there were exemplars of pupils’ work at different levels on the National Curriculum in Action website.

One of the things we at the Qualifications and Curriculum Authority did to help address that was to come up with a set of what we called “Key Characteristics” for each level.

What that meant was that if you were not entirely sure whether someone was at a level 4, say, or a level 5 (because there was quite a bit of overlap, especially at the higher levels), one way to decide was to check whether the pupil was able to judge not only whether some data was plausible (level 4), but also whether it was accurate (level 5). It seems a bit arbitrary, but there was deep thinking behind such delineations. Deciding whether something is plausible may come down to gut feeling. Deciding whether it is accurate moves beyond intuition and involves looking for further data, possibly even devising some data validation formulae or code to help. (Clearly, these days such an approach might be used when evaluating pupils’ responses to information which purports to be factual.)
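To make the plausible/accurate distinction concrete, here is a minimal sketch in Python of the kind of data validation code a pupil might devise. It is purely illustrative: the function names, the temperature readings and the tolerance are all assumptions of mine, not part of the original Key Characteristics. The first check only asks whether a value falls within sensible bounds; the second goes further and compares it with independent readings.

```python
from statistics import mean


def is_plausible(value, low, high):
    """Range check: is the value within sensible bounds? (hypothetical helper)"""
    return low <= value <= high


def is_accurate(value, reference_values, tolerance):
    """Cross-check: does the value agree with the average of independent
    readings, to within the given tolerance? (hypothetical helper)"""
    return abs(value - mean(reference_values)) <= tolerance


# Hypothetical example: a classroom temperature reading in degrees Celsius.
reading = 24.0
other_thermometers = [18.5, 19.0, 18.8]  # made-up independent readings

print(is_plausible(reading, low=-10, high=40))            # True: could be a room temperature
print(is_accurate(reading, other_thermometers, tolerance=1.0))  # False: disagrees with the other readings
```

The reading passes the plausibility test but fails the accuracy test, which is exactly the gap between the two levels: the second judgement needs further data, not just intuition.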

Thirdly, the levels were originally intended to be used for end of Key Stage assessment – but then they began to be used between stages. Many schools started to use levels for formative assessment, some even going so far as to insist that teachers assign a level to each piece of work – a purpose for which they had never been intended.

A new opportunity

Although it may not have seemed like it at the time, the decision to ditch levels was in fact a good thing. The main consequence of this for teachers – aside from causing them to panic – is that they can start to have conversations about what assessment is for, how best to measure it and how to report it in such a way that pupils know what they need to do next, and parents know how their child is getting on.

In other words, teachers have been given permission to start with an almost completely blank sheet and begin to exercise their professional judgement. Why ‘almost’ completely blank? Because when evaluating new approaches to assessment, you will have to check whether the ones you and your team favour tie in with the school’s assessment policy. Here are five places to look for examples of approaches to assessment:

1 In 2014, the DfE published the results of a competition to find schools that were adopting innovative approaches to assessment. I took those and evaluated them in terms of how they might be applied to the subject of computing. To see the articles I wrote about that, start at this link.

2 Join the Computing at School (now the National Centre for Computing Education) site, where teachers share their work and invite comments. You may not have to reinvent the wheel entirely.

3 If you are on Facebook, apply to join the ICT and Computing Teachers group. It’s not just for secondary teachers, but the discussions about resources and approaches are very interesting.

4 Another group on Facebook to consider is KS3 Computing. Again, this is a closed group, so you will have to apply to join (note that neither of these Facebook groups is concerned solely with assessment).

5 And finally, there is that old standby Google, or your alternative search engine of choice.

20 questions

Even now, many schools have not yet decided on what their ‘post-levels’ assessment approach should be. Some have even chosen to map the new programme of study to the old levels, but this won’t work as they simply don’t match up. When looking at different approaches to assessing computing, it’s worth using the following checklist to evaluate them (points 1, 2, 4 and 5 are similar to those espoused in the report on Assessment without Levels, which is available here). Several questions are especially pertinent if you are thinking about buying a third party application to help you work out a pupil’s ‘level’ (for want of a better shorthand term), or about creating one yourself.

I’ve numbered the points here for ease of reference, not to indicate order of importance. The list is not intended to be adhered to absolutely rigidly, but to provide a starting point.

1 Does the approach tie in with your school’s assessment policy?

2 If only some of it does, how will you ‘plug the gap’?

3 What is going on under the bonnet? In other words, how is it working out the results it produces? It may not be easy to obtain this information directly from the supplier, but try to ascertain how it’s working.

If it appears to be making unwarranted assumptions, using data unrelated to learning in your subject, or is simply not valid or reliable, move on. ‘Valid’ is intended to mean, does it test what it says it’s testing (as opposed to, say, common sense or general knowledge)? ‘Reliable’ is intended to mean, does it give consistent results? If it gives you a different result every time you use it, forget it.
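If you are able to run the same piece of work through a tool more than once, a crude reliability check can be sketched in a few lines of Python. This is only an illustration under my own assumptions: the function name, the scores and the idea of an allowed spread are hypothetical, and a check like this says nothing about validity, which still needs your professional judgement.

```python
def is_reliable(results, max_spread=0):
    """Return True if repeated results for the SAME piece of work differ
    by no more than max_spread. (Hypothetical helper, not a standard test.)"""
    if len(results) < 2:
        raise ValueError("Need at least two results to judge consistency")
    return max(results) - min(results) <= max_spread


# Made-up scores from assessing the same pupil's work three times.
consistent_runs = [4, 4, 4]
erratic_runs = [3, 5, 4]

print(is_reliable(consistent_runs))  # True: same result every time
print(is_reliable(erratic_runs))     # False: a different result each time
```

Setting max_spread above zero would allow for small variations, if you decide that a tool which is occasionally one point out is still good enough for your purposes.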

4 Is the (implied) approach credible?

5 Is it good value for money?

6 Is it over-engineered? In other words, does it simply try to do too much, and a lot more than you really need it to?

7 Is it under-engineered? In other words, does it offer anything more than you could create for yourself by using Excel?

8 If it is free, is it supported?

9 Does it support teacher input and/or judgement?

10 Some progression grids may not use the term ‘levels’, but somehow look suspiciously like them. One question to ask is, how is this different from the old system?

11 Some progression grids divide the assessment criteria into the strands ‘IT’, ‘computing’, ‘digital literacy’, and ‘e-safety’. Is it entirely helpful to separate the strands out, as opposed to creating integrated tasks? This would be a good question to consider if your approach consists of giving pupils project work.

12 Is there an underlying assessment taxonomy? You know, like Bloom’s, or a variation of Bloom’s. Because if there is, you need to know whether it’s a framework you agree with. If the underlying approach isn’t to your liking, the data it generates is not likely to be either. (It’s worth noting that taxonomies or frameworks aren’t neutral. There’s more information about taxonomies here: Assessing without levels.)

13 Is there an underlying computing or ICT taxonomy? In other words, is the approach based on a particular view on the (assumed) difficulty of computing concepts? For example, if it ‘thinks’ a particular concept or skill is exceptionally difficult, how does that tally with your own views and crucially, the computing programme of study?

14 Does it actually cover the relevant parts of the programme of study?

15 Are the levels equivalent in some sense across the strands? If so, why? If not, is it still meaningful?

16 Would it be useful to have a set of ‘key characteristics’ of the kind mentioned earlier?

17 Does it make sense?

18 Would it be easy to implement?

19 Would it help teachers, students and parents to understand what the student knows, understands and can do?

20 Would it enable ‘next steps’ for the student to be worked out?

One final point – document your journey. Ofsted has said it will understand if schools haven’t fully implemented a new assessment regime in these early stages. (Update note: five years on, I should imagine that Ofsted would be puzzled if a school hadn’t made significant progress by now.) But even if that’s the case, inspectors will still want to know what you are doing about it, and how you are assessing pupils in the meantime. Ultimately, clear, accurate and fair assessment is essential if students are to make good progress.

5 useful apps for recording what pupils do

Explain Everything

For recording and annotating recordings

Animoto

For creating videos

Socrative

Great for asking questions, analysing the answers and getting reports. Think student response system, but not tied to a particular operating system or device.

Google Docs

This is a great program for encouraging pupils to collaborate and contribute. You can look at the page histories and see who has contributed what.

Puzzle maker

For creating various kinds of puzzles and quizzes. Great for assessing low-level thinking skills or for quick formative assessment.

Having read this article, would you not agree with me that most if not all of it is still relevant?

This article first appeared in Teach Secondary. Here’s the link in case you don’t believe me: Marking Time – ICT Assessment in a Post-levels World.
