Efficiency? Don't Make Me Laugh!

One of the reasons always given for adopting technology is that it leads to efficiency gains. In other words, it (supposedly) helps you do what you were already doing, but faster, cheaper or more easily.

Is this actually true, or is it a convenient white lie with which we console ourselves? Has anybody undertaken a Total Cost of Ownership-style analysis that looks not only at the financial costs of ownership but also at the social and economic ones? If anybody were to do so, I imagine we would have to re-evaluate how much time, energy and money we invest in technology.

This may sound an odd way to open an article by an educational ICT specialist (and one who loves technology). But I think these issues should be addressed.

Take three examples. Firstly, I've been working on two presentations. Each will last an hour. I have to allow ten minutes for questions, and five minutes at the start for being introduced and saying 'Good Morning', which leaves me 45 minutes to fill in each. That is, an hour and a half of material in total.

So far, preparing for these presentations has taken nearly three days.

Part of the reason is that I like to be well-prepared. As the old saying goes, you don't get a second chance to make a first impression, so I would always rather face the problem of trying to pack in too much than the opposite one of hoping that nobody will notice if I start talking incredibly slowly to drag it out.

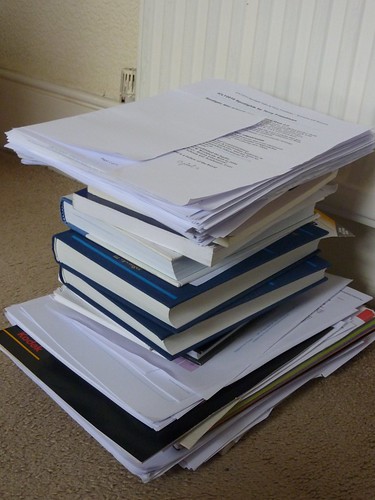

*A little light reading...*

But another part of the reason is that it is now so easy to keep finding new sources of information to read and consider. The photograph shows the amount of reading I've done so far. Admittedly, this includes a few books, but the rest is, by and large, printed from the internet. In fact, only this morning I accidentally came across yet another article I think I ought to read.

Now, if I'd had to do all this research in my local library, as I used to before the web was invented, I would have stopped long ago. There comes a point where the likely benefit of reading one more article is outweighed by the cost (however measured) of acquiring it. That point is reached much sooner when you have to physically move from your home to a library (and possibly cart a load of stuff home afterwards).

What we have here is a modern manifestation of Say's Law, which states that supply creates its own demand. The reason I'm acquiring all this reading material is that it's so easily available. Perhaps too easily available.

My second example is writing. It is so easy to rewrite stuff that it's almost impossible to exercise the self-discipline required to say "That is now good enough." When trying a different turn of phrase, looking up a nice quotation, or finding an appropriate illustration (or, in my case for this article, actually creating one) is so easy, the temptation is to keep tweaking on and on in the vain hope of achieving perfection.

Thirdly, lesson preparation is now far more time-consuming than it used to be, for all the reasons I've rehearsed so far. Worksheets and other resources tend to be more interactive, colourful, neat and engaging than ever. But are the gains in students' learning enough to justify the huge increase in person-hours that the availability of computers has led to?

(I'm sure I'm not the only person who can say that some of my best lessons were the ones I thought of on the way in to work or even, sometimes, the ones in which I allowed a chance comment or question from a student to dictate the content of the entire lesson, because the moment was there and had to be seized.)

Asking such questions does not mean advocating a return to the steam age. But it strikes me that answering them would give us a much more honest appraisal of the relative costs and benefits of access to computer technology.

This is important for two sets of people: teachers, because it's a workload issue, and students, who need to learn how to evaluate the effectiveness of technology. Students should understand such matters both because it's all part of being digitally literate and because otherwise it will become a workload issue for them too.

What all this boils down to is an economic argument. We tend to work, especially in education, on the basis of the well-known saying, 'If a job is worth doing, it's worth doing properly.'

This is almost never the case.

Think of it in terms of exam grades. If you advise your students to work as hard as they can to attain a grade A in your subject, the extra hours they spend doing so could cost them grades in their other subjects. Taking a wider view, which is better: one grade A and four grade Gs, or two grade Bs and three grade Cs?

Think of it in terms of lesson preparation. If you prepare one group's lessons absolutely perfectly, at the cost of being almost completely unprepared for all the others, would that be acceptable?

At the risk of sounding as if I'm advocating low standards (which I most definitely am not), I think we have to try to develop, and inculcate in students, the idea of 'good enough'. It's OK for things to be 'good enough': they don't have to be perfect.

Being a techno-addict (a boy who likes his toys), I can imagine a technological solution. Perhaps a macro that would actually prevent you from working on a document once you had spent a set amount of time on it. It would be easy to write.
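To show how little such a macro would need, here is a minimal sketch in Python. It is a hypothetical illustration rather than an existing tool: the `TimeBoxedDocument` class, the one-hour budget and the simulated clock are all assumptions made for the example. It notes when work on a document began and simply refuses further edits once the time budget has been spent.

```python
import time
from typing import Callable, List


class TimeBoxedDocument:
    """Hypothetical 'good enough' guard: stops accepting edits once a time budget is used up."""

    def __init__(self, budget_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.budget_seconds = budget_seconds
        self._clock = clock
        self._started = clock()  # when work on the document began
        self.lines: List[str] = []

    def remaining(self) -> float:
        """Seconds left in the budget (never negative)."""
        elapsed = self._clock() - self._started
        return max(0.0, self.budget_seconds - elapsed)

    def edit(self, text: str) -> bool:
        """Append text if time remains; otherwise refuse and return False."""
        if self.remaining() <= 0:
            return False  # time's up: the document is now 'good enough'
        self.lines.append(text)
        return True


if __name__ == "__main__":
    # Simulated clock so the example runs instantly: each reading is 20 minutes later.
    ticks = iter(range(0, 10_000, 1200))
    doc = TimeBoxedDocument(budget_seconds=3600, clock=lambda: next(ticks))
    for draft in ["First draft", "A better phrase", "A nicer quotation", "One more tweak"]:
        accepted = doc.edit(draft)
        print(f"{draft!r}: {'accepted' if accepted else 'refused'}")
```

Passing the clock in as a parameter keeps the sketch easy to test. A real macro would presumably have to hook into a word processor's own editing or saving events, which is not shown here.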

Indeed, there is a free text editor that does something similar. Write or Die seeks to cure writer's block or slow writing by becoming more and more noisy and unpleasant as time limps on. It's almost impossible to continue in the circumstances it creates.

A technological solution to a problem caused by our inability to exercise self-discipline in our use of technology? I like it!